Web scraping involves extracting data from websites, making it a powerful tool for gathering information efficiently. It's widely used in industries for market analysis, price tracking, and more.

However, before diving into web scraping, it's crucial to verify whether a website allows it. Scraping without permission can lead to legal and ethical issues, such as violating a website’s terms of service or intellectual property rights.

Checking these aspects beforehand ensures compliance and prevents potential repercussions.

Check the Website’s Robots.txt File

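The robots.txt file is a plain-text file at the root of a website that tells web crawlers which pages or sections of the site they may or may not access. Compliance is voluntary, since the file cannot technically block anyone, but it is the first place a site states how it wants automated traffic handled.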

To locate it, add /robots.txt to the site's root domain (e.g., example.com/robots.txt). The file lists rules grouped by user agent, the name each crawler identifies itself with.

- User-agent: Specifies the crawler the rule applies to.

- Disallow: Indicates sections that are off-limits for scraping.

Example:

User-agent: *
Disallow: /private/

This means all crawlers should avoid the /private/ section of the site.
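Python's standard library includes urllib.robotparser, which can apply these rules for you. The sketch below checks the example rules above; `robots_url`, `parser_from_text`, and `parser_from_site` are hypothetical helper names, and `my-scraper` is a placeholder user agent. Note that this parser matches rules by simple path prefix, so it may not interpret wildcard patterns (such as /*.pdf) the way major search engines do.

```python
from urllib.parse import urlsplit, urlunsplit
from urllib.robotparser import RobotFileParser


def robots_url(page_url: str) -> str:
    """robots.txt always sits at the root of the host, whatever page you start from."""
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def parser_from_text(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt contents already held in memory."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


def parser_from_site(page_url: str) -> RobotFileParser:
    """Download and parse a site's live robots.txt (requires network access)."""
    parser = RobotFileParser(robots_url(page_url))
    parser.read()
    return parser


# Check the example rules: everything under /private/ is off-limits.
rules = parser_from_text("User-agent: *\nDisallow: /private/\n")
print(rules.can_fetch("my-scraper", "https://example.com/private/data"))  # False
print(rules.can_fetch("my-scraper", "https://example.com/products"))      # True
```

For a real site, `parser_from_site("https://www.adidas.com/")` would fetch https://www.adidas.com/robots.txt and answer the same `can_fetch` questions against its live rules.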

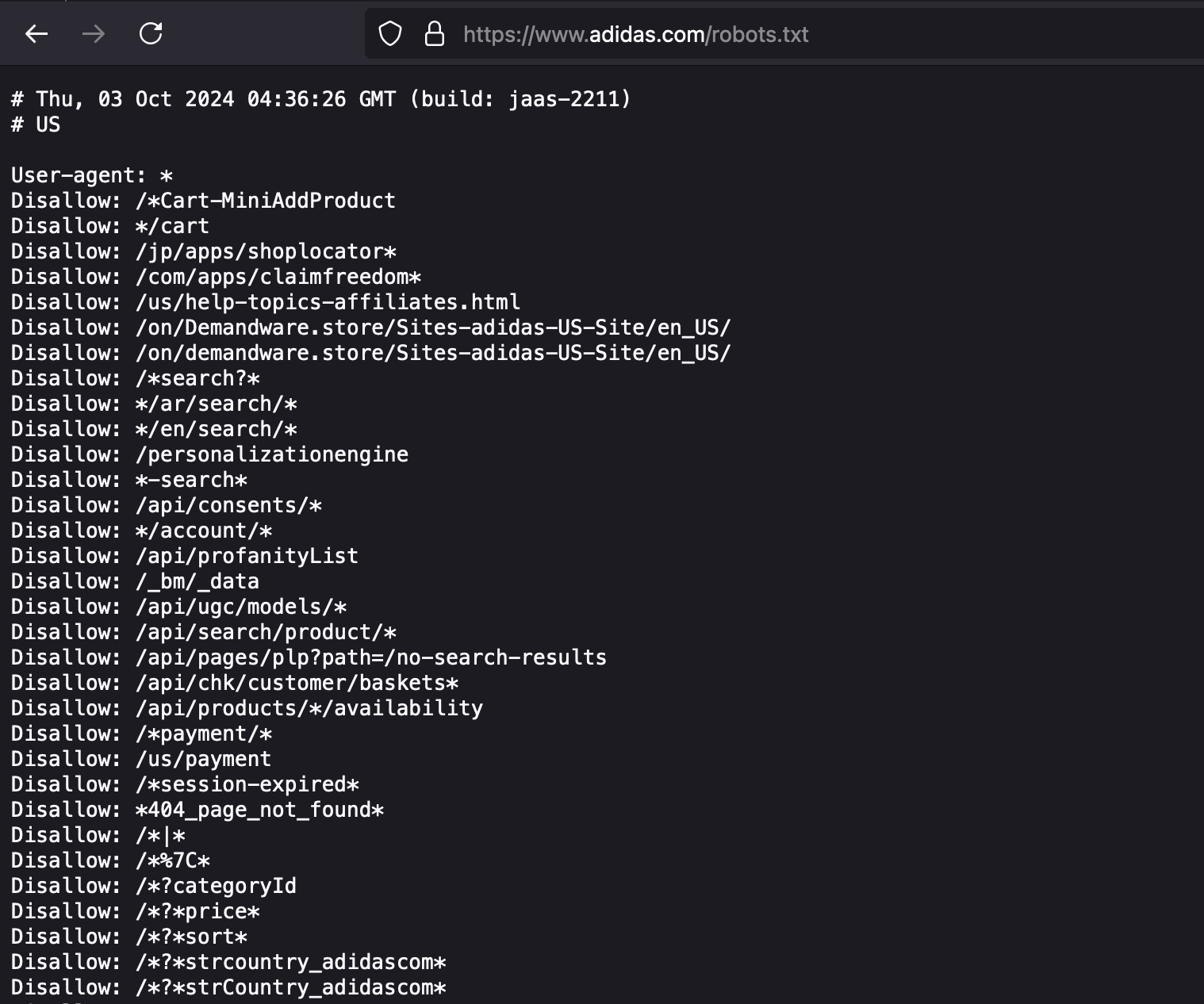

For example, if we take the Adidas website and enter the /robots.txt at the end, like this: https://www.adidas.com/robots.txt

We get the following result:

The Adidas page has multiple pages that the web crawler is not allowed to scrape.

Review the Website’s Terms of Service

Before scraping, it's essential to review the Terms of Service (ToS) to ensure you're compliant with the website's rules. Some websites explicitly prohibit scraping, while others may permit it under certain conditions.

Look for key phrases such as “scraping,” “data extraction,” or “automated tools.” These terms often clarify the website’s stance on web scraping.

Example:

A website’s ToS might state:

"The use of automated tools to extract data is prohibited without prior written consent."

This means you must obtain permission before scraping their data to avoid potential legal issues.
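Terms of Service pages are often long, so a quick keyword pass can point you to the clauses worth reading closely. The sketch below is a simple substring match over sentences using the phrases suggested above; `find_scraping_clauses` and `KEY_PHRASES` are hypothetical names, and the sentence splitting is deliberately naive. It will miss wordings such as "scrape" unless you add them, so treat it as a first pass, not a substitute for reading the full ToS.

```python
import re

# Phrases suggested above; extend the tuple with other wordings as needed.
KEY_PHRASES = ("scraping", "data extraction", "automated tools")


def find_scraping_clauses(tos_text: str, phrases=KEY_PHRASES) -> list[str]:
    """Return every sentence of the ToS that mentions one of the phrases."""
    # Naive sentence split: break after ., ! or ? followed by whitespace.
    sentences = re.split(r"(?<=[.!?])\s+", tos_text)
    return [s.strip() for s in sentences if any(p in s.lower() for p in phrases)]


tos = ("Welcome to our store. The use of automated tools to extract data is "
       "prohibited without prior written consent. Prices may change.")
for clause in find_scraping_clauses(tos):
    print(clause)
```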

Taking the same Adidas US website as an example, its Terms and Conditions include the following.

Analyze HTTP Headers and Meta Tags

Using developer tools, you can check for X-Robots-Tag headers or meta tags that signal restrictions on web crawling and scraping. Open the browser’s developer tools (usually by pressing F12), go to the Network tab, and select the page request to see its HTTP response headers. To check meta tags, inspect the page’s HTML in the Elements tab.
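The same header check can be scripted. This is a minimal sketch using only the standard library; `x_robots_directives` and `fetch_headers` are hypothetical helper names. It is simplified on purpose: it lower-cases and splits each X-Robots-Tag value on commas, and does not interpret crawler-specific prefixes such as "googlebot: noindex". It also assumes the server answers HEAD requests the same way as GET, which not every site does.

```python
import urllib.request
from email.message import Message


def x_robots_directives(headers: Message) -> set[str]:
    """Collect the directives from every X-Robots-Tag header in a response."""
    directives = set()
    for value in headers.get_all("X-Robots-Tag", []):
        for token in value.split(","):
            token = token.strip().lower()
            if token:
                directives.add(token)
    return directives


def fetch_headers(url: str) -> Message:
    """Send a HEAD request and return the response headers (requires network)."""
    request = urllib.request.Request(url, method="HEAD")
    with urllib.request.urlopen(request, timeout=10) as response:
        return response.headers
```

Usage: `x_robots_directives(fetch_headers("https://example.com/"))` returns a set such as `{"noindex", "nofollow"}` when the site sends those directives, or an empty set when it sends no X-Robots-Tag header.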

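A “noindex, nofollow” tag, for instance, tells search engines not to index the page or follow its links. It targets indexing rather than scraping directly, but it suggests the owner does not want the content harvested by automated tools.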

Example:

<meta name="robots" content="noindex, nofollow">

This tag indicates that the website prefers not to have its content scraped or indexed.
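To check for this tag without opening developer tools, you can parse the page’s HTML. The sketch below uses the standard library’s html.parser; `RobotsMetaParser` and `meta_robots_directives` are hypothetical names, and it only looks at tags whose name attribute is exactly "robots" (crawler-specific tags such as name="googlebot" are ignored).

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects the directives of every <meta name="robots"> tag it sees."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        # HTMLParser lower-cases tag and attribute names; values keep their case.
        if tag != "meta":
            return
        attributes = {name: value or "" for name, value in attrs}
        if attributes.get("name", "").strip().lower() != "robots":
            return
        for token in attributes.get("content", "").split(","):
            token = token.strip().lower()
            if token:
                self.directives.add(token)


def meta_robots_directives(html: str) -> set[str]:
    """Return the robots meta directives found in an HTML document."""
    parser = RobotsMetaParser()
    parser.feed(html)
    parser.close()
    return parser.directives


page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print(sorted(meta_robots_directives(page)))  # ['nofollow', 'noindex']
```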

Check for CAPTCHA or Anti-Bot Measures

Websites often use CAPTCHA, rate limiting, and bot detection systems to prevent automated scraping. These tools distinguish between human users and bots, making it difficult or impossible for scrapers to access data.

If a website frequently presents CAPTCHA challenges or limits access based on high traffic, it’s a strong indication that scraping might not be permitted. Similarly, bot detection systems, like Cloudflare, block suspicious behavior, such as repeated requests from the same IP address.

Example:

If you encounter a CAPTCHA challenge or a Cloudflare warning after multiple requests, the website likely restricts scraping attempts.
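If you script your checks, a rough heuristic can flag responses that look like a challenge page so you stop and reassess instead of retrying. This is a sketch under loudly stated assumptions: the marker phrases and the reliance on a `Server: cloudflare` header are guesses about what challenge pages commonly contain, not an official detection method, and `looks_like_bot_challenge` is a hypothetical name. Expect both false positives and misses.

```python
# Assumed phrases that often appear on challenge pages; not an exhaustive list.
CHALLENGE_MARKERS = ("captcha", "are you a robot", "verify you are human")
# Status codes commonly returned when a request is blocked or throttled.
BLOCKING_STATUSES = {403, 429, 503}


def looks_like_bot_challenge(status: int, headers: dict[str, str], body: str) -> bool:
    """Flag a response whose body mentions a CAPTCHA-style phrase, or whose
    blocking status code comes from a server identifying as Cloudflare."""
    text = body.lower()
    if any(marker in text for marker in CHALLENGE_MARKERS):
        return True
    if status not in BLOCKING_STATUSES:
        return False
    # Header names are case-insensitive, so normalise them before lookup.
    server = {k.lower(): v for k, v in headers.items()}.get("server", "")
    return "cloudflare" in server.lower()
```

Treat a `True` result as a signal to pause and review the site's policies, not as a problem to work around.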

Monitor Rate Limiting and IP Blocking

Rate limiting is a technique websites use to control the number of requests a user can make in a given time frame. When sending multiple requests, watch for responses that slow down or block further access; this is a sign the website limits scraping activity.

Websites may throttle or block your IP if they detect rapid or excessive requests, which indicates scraping might not be allowed.

Example:

If a site returns HTTP 429 (Too Many Requests) after a series of fast requests, it’s likely using rate limiting, signaling restrictions on scraping.
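When you do receive a 429, the respectful response is to wait before trying again, honouring the server's Retry-After header if it sends one. A minimal standard-library sketch follows; `retry_delay` and `fetch_politely` are hypothetical helper names, and the one-second backoff base and four-attempt limit are arbitrary assumptions you should tune (or lower) for the site in question.

```python
import time
import urllib.error
import urllib.request
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime
from typing import Optional


def retry_delay(retry_after: Optional[str], attempt: int, base: float = 1.0) -> float:
    """Seconds to wait before retrying.

    Honours a Retry-After header given as a number of seconds or as an HTTP
    date; otherwise falls back to exponential backoff (base * 2**attempt).
    """
    if retry_after:
        value = retry_after.strip()
        if value.isdigit():
            return float(value)
        try:
            when = parsedate_to_datetime(value)
        except (TypeError, ValueError):
            when = None  # unparseable header: fall through to backoff
        if when is not None:
            if when.tzinfo is None:
                when = when.replace(tzinfo=timezone.utc)
            return max(0.0, (when - datetime.now(timezone.utc)).total_seconds())
    return base * 2 ** attempt


def fetch_politely(url: str, max_attempts: int = 4) -> bytes:
    """GET a URL, backing off whenever the server answers 429 Too Many Requests."""
    for attempt in range(max_attempts):
        try:
            with urllib.request.urlopen(url, timeout=10) as response:
                return response.read()
        except urllib.error.HTTPError as err:
            # Any other error, or a 429 on the final attempt, is re-raised.
            if err.code != 429 or attempt == max_attempts - 1:
                raise
            time.sleep(retry_delay(err.headers.get("Retry-After"), attempt))
    raise RuntimeError(f"no attempts made for {url}")
```

Repeated 429s even with backoff are themselves a strong hint that the site does not want automated access at your volume.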

Reach Out to the Website Owner

Contacting the website owner is one of the best ways to obtain clear permission for scraping. It avoids legal issues and ensures compliance with their policies.

When reaching out, be polite and transparent about your intentions. Explain how you plan to use the data, why it's valuable to you, and how you'll respect their terms. Offering to follow their guidelines and limit requests can build trust.

For example, you could use the following template:

Dear [Website Owner],

I’m writing to request permission to scrape data from your website for [purpose]. I assure you that my usage will comply with your terms, and I will limit my requests to avoid server strain. Please let me know if this is permissible.

Sincerely,

[Your Name]

Importance of Following Ethical Practices and Legal Guidelines

When engaging in web scraping, it’s crucial to adhere to ethical practices and legal guidelines. Scraping without permission can lead to legal consequences, such as lawsuits, and technical ones, such as IP bans, while aggressive request rates can degrade the website’s performance for other users. Always respect a site’s robots.txt, Terms of Service, and anti-scraping measures. By obtaining consent and following ethical standards, you ensure your scraping activities are responsible, legally compliant, and sustainable in the long run.

For businesses looking to streamline their price scraping efforts and ensure compliance, partnering with an expert can make all the difference. DataHen offers tailored web scraping solutions designed to help you gather actionable price data efficiently and legally.