Introduction to Data Quality Challenges

As data-driven strategies become the cornerstone of business decision-making, data quality plays a pivotal role. The accuracy, completeness, and reliability of data directly determine how effective business insights and decisions can be. Ensuring high data quality is not easy, however, and the challenges involved can significantly affect an organization's operational efficiency and strategic outcomes.

Why Data Quality is Important to an Organization

Reduction of Operational Costs: High-quality data lowers mailing costs by reducing undeliverable mail. Accurate customer data also removes the need to resend packages and can even qualify the organization for postage discounts, leading to significant cost savings.

Enhancement of Customer Relations: Reliable data allows for a deeper understanding of customers, enabling personalized communication and preemptive service. This leads to improved customer satisfaction, loyalty, and a stronger relationship with the brand.

Consistency Across the Organization: A robust data quality strategy keeps data consistent across departments. This prevents issues like duplicate records and data silos and supports better communication and coordination within the organization.

Improved Marketing Efficiency: Quality data allows for more targeted and effective marketing campaigns. By understanding the demographics and behaviors of the ideal customer base, resources are utilized more efficiently, avoiding wasteful broad-spectrum marketing.

Enhanced Customer Service: High data quality is fundamental for personalizing customer interactions, anticipating customer needs, and resolving issues efficiently. Accurate and up-to-date customer information enables tailored services and quicker, more effective problem resolution, leading to a better overall customer experience.

Common Challenges in Maintaining Data Quality

Maintaining high data quality is a multifaceted challenge that involves various aspects:

Volume and Variety: The sheer volume and diversity of data collected from different sources add complexity to data management. Ensuring consistency and accuracy across such vast datasets is a daunting task.

Data Decay: Over time, data can become outdated or irrelevant. Keeping up with the dynamic nature of data and continuously updating it is a significant challenge.

Integration Issues: As organizations use various systems and software, data integration often becomes problematic. Disparate systems can lead to inconsistencies and data silos.

Human Error: Manual data entry or handling is prone to errors. These errors can propagate through datasets, leading to incorrect analysis and conclusions.

Lack of Standardization: Without standardized data formats and protocols, maintaining data quality becomes more complicated. This leads to challenges in data compatibility and integration.

Data Security and Privacy Concerns: Ensuring data quality also means protecting data from breaches and unauthorized access, both of which can compromise its integrity.

Complexity in Data Cleaning: Identifying and correcting errors in large datasets is time-consuming and complex, often requiring specialized skills.
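
Several of these challenges, such as human error, lack of standardization, and duplicate records, can be measured directly before any cleaning starts. The Python sketch below profiles a small batch of customer records for missing emails, duplicate IDs, and malformed emails. The field names (`customer_id`, `email`), the sample rows, and the simplified email pattern are illustrative assumptions, not part of any particular tool.

```python
import re
from collections import Counter

# Deliberately simplified email pattern for illustration; real validation is stricter.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def profile_records(records, key="customer_id", email_field="email"):
    """Count missing emails, duplicate keys, and malformed emails in a list of dicts."""
    missing = sum(1 for r in records if not r.get(email_field))
    key_counts = Counter(r.get(key) for r in records)
    # Every occurrence of a key beyond the first counts as one duplicate.
    duplicates = sum(count - 1 for count in key_counts.values() if count > 1)
    # Only non-empty emails are checked for format; empty ones are already "missing".
    invalid = sum(
        1
        for r in records
        if r.get(email_field) and not EMAIL_PATTERN.match(r[email_field])
    )
    return {"missing_email": missing, "duplicate_ids": duplicates, "invalid_email": invalid}


if __name__ == "__main__":
    rows = [
        {"customer_id": 1, "email": "ana@example.com"},
        {"customer_id": 2, "email": ""},                # missing value (e.g. skipped at entry)
        {"customer_id": 2, "email": "bo@example.com"},  # duplicate id
        {"customer_id": 3, "email": "not-an-email"},    # invalid format
    ]
    print(profile_records(rows))
    # → {'missing_email': 1, 'duplicate_ids': 1, 'invalid_email': 1}
```

Counting problems before fixing them gives a baseline, so later cleaning work can be measured against it.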

The Emergence of AI in Data Management

The advent of Artificial Intelligence (AI) marks a transformative era in data management. AI has expanded what is possible in this domain and helps address some of its most persistent challenges, including poor data quality and inconsistent data. The shift toward AI-centric data management is driven by the need to handle increasingly complex and voluminous datasets more efficiently and accurately.

Redefining Data Processing and Analysis

AI algorithms can process large volumes of data far faster than human analysts can. This speed is crucial in a world where data is generated continuously and at scale. AI systems can detect complex patterns, trends, and anomalies in a dataset, surfacing insights that would be difficult, if not impossible, to discern manually. This level of analysis is essential for making informed decisions and gaining a competitive edge in the market.

Enhancing Data Accuracy and Quality

One of the most significant impacts of AI in data management is its ability to improve data quality. AI algorithms are adept at identifying inaccuracies and inconsistencies in datasets. They can perform tasks such as data cleansing, validation, and enrichment, which are vital for maintaining high data quality standards. By automating these processes, AI reduces the likelihood of human error and improves the accuracy of data records.
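
Production tools may use machine-learning models for this, but the underlying idea of flagging values that sit far outside the norm can be shown with a simple statistical stand-in. The sketch below uses the modified z-score based on the median absolute deviation (MAD) with the commonly cited cutoff of 3.5. It is a classical technique, not an AI model, and the order amounts are made up for illustration.

```python
from statistics import median


def flag_outliers(values, threshold=3.5):
    """Return indices of values whose modified z-score exceeds `threshold`.

    The modified z-score is 0.6745 * (x - median) / MAD, where MAD is the
    median absolute deviation from the median.
    """
    if not values:
        return []
    med = median(values)
    mad = median([abs(v - med) for v in values])
    if mad == 0:
        # More than half the values equal the median; treat any other value as unusual.
        return [i for i, v in enumerate(values) if v != med]
    return [
        i
        for i, v in enumerate(values)
        if abs(0.6745 * (v - med) / mad) > threshold
    ]


if __name__ == "__main__":
    # Hypothetical order amounts; 2100.0 looks like a mistyped 21.00.
    amounts = [20.0, 22.5, 19.0, 21.0, 2100.0, 20.5]
    print(flag_outliers(amounts))  # → [4]
```

The median-based score is used instead of a mean and standard-deviation z-score because a single extreme value inflates the standard deviation and can mask itself, especially in small samples.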

Predictive Analytics and Forecasting

AI has brought predictive analytics to the forefront of data management. By analyzing historical data, AI can forecast future trends and customer behavior, allowing organizations to make proactive decisions. This ability is invaluable in areas such as market analysis, customer behavior prediction, and risk management.

Customization and Personalization

AI enables a more personalized approach to data handling. It can tailor data management processes to the specific needs of an organization, taking into account the unique characteristics of the data it handles. This customization extends to customer interactions, where AI can help personalize marketing efforts and customer service based on individual customer data.

Overcoming Traditional Limitations

Traditional data management often struggles with data silos, where data is trapped in one part of an organization and inaccessible to others. AI can help break down these barriers so that data flows more freely across departments and systems. This interconnected approach to data analytics gives a more holistic view of data and its implications across the organization.
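
A concrete symptom of data silos is a record that exists in one system but not in another. Before any tool can bridge that gap, it has to be measured. The sketch below compares the key sets of two systems and reports what each is missing. The system names (a CRM and a billing system) and the shared customer ID key are hypothetical.

```python
def reconcile(left_ids, right_ids):
    """Compare key sets from two systems and report the gaps on each side."""
    left, right = set(left_ids), set(right_ids)
    return {
        "only_in_left": sorted(left - right),   # e.g. customers billing never saw
        "only_in_right": sorted(right - left),  # e.g. billed customers missing from the CRM
        "in_both": len(left & right),
    }


if __name__ == "__main__":
    crm_ids = [101, 102, 103, 104]
    billing_ids = [102, 103, 105]
    print(reconcile(crm_ids, billing_ids))
    # → {'only_in_left': [101, 104], 'only_in_right': [105], 'in_both': 2}
```

Set operations keep the comparison simple; for very large tables the same idea would normally run as a join inside the database rather than in memory.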

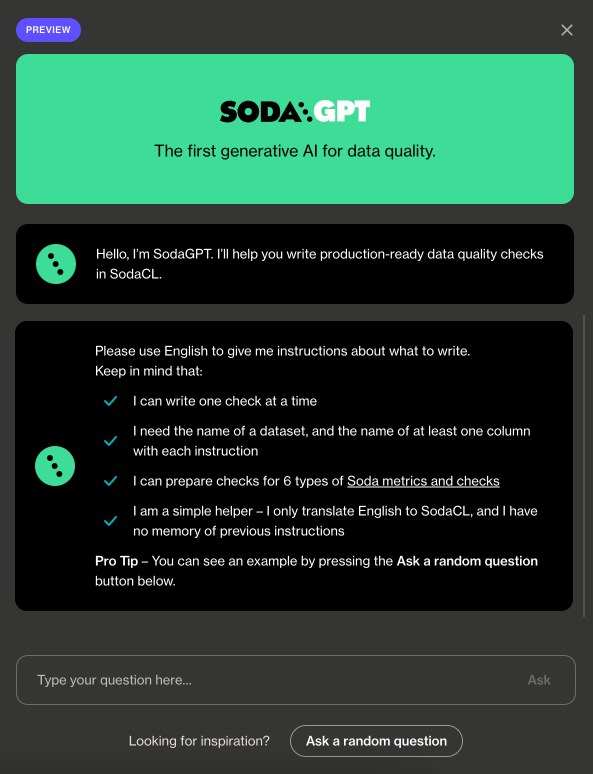

Introducing SodaGPT: A No-Code AI Solution

In the evolving landscape of data management, SodaGPT emerges as a groundbreaking solution, marking a significant leap forward in data quality assurance. This tool harnesses Artificial Intelligence (AI) to offer a no-code solution for technical and non-technical users alike. SodaGPT represents a paradigm shift in how organizations approach data quality, making it accessible, efficient, and user-friendly.

A Bridge Between Data and Users

SodaGPT stands out as a tool that democratizes data quality testing. By translating natural language into production-ready data quality checks, it allows people without coding expertise to take an active part in maintaining data accuracy. This feature lowers the barrier to entry for data quality management, enabling a broader range of professionals to contribute to this critical aspect of data handling.

Empowering Organizations with AI

At its core, SodaGPT utilizes proprietary generative pre-trained transformer technology to automate and simplify the data testing process. This technology is adept at understanding user inputs in natural language, translating them into complex data quality checks without the need for coding. This not only accelerates the test creation process but also ensures that the checks are aligned with specific business rules, requirements, and data standards.

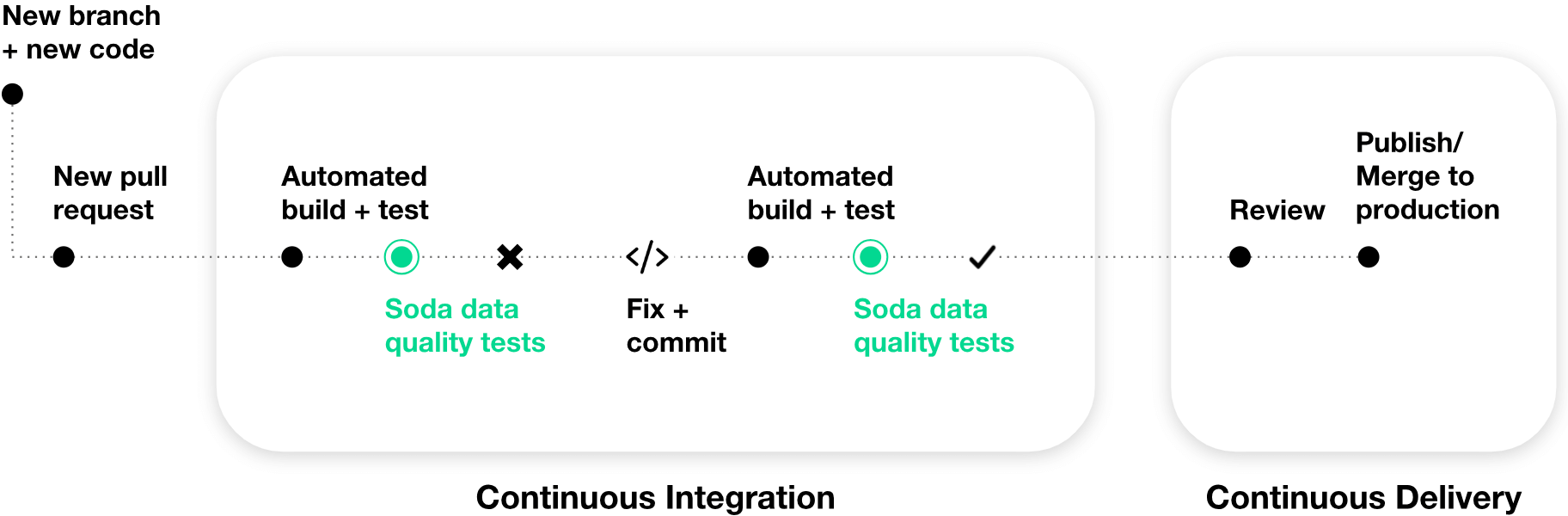

Enhancing Data Quality Early in the Lifecycle

SodaGPT advocates a 'shift left' approach to data testing, which means assessing data quality as early as possible in the data lifecycle. This proactive stance ensures that data issues are identified and addressed at the initial stages, before they escalate into larger problems that affect data-driven products or cause downstream complications. By bringing data quality checks into the early stages of the development lifecycle, SodaGPT helps create more reliable and robust data pipelines, so that the data feeding business processes is of high quality from the outset.
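
In pipeline terms, shifting left usually means running checks where data enters and stopping a bad batch before it is loaded downstream. The plain-Python sketch below shows that gating pattern. The check names, the `load` callback, and the `DataQualityError` exception are hypothetical; this illustrates the pattern, not how SodaGPT or Soda Cloud work internally.

```python
class DataQualityError(Exception):
    """Raised when a batch fails one or more checks before loading."""


def run_checks(batch, checks):
    """Return the names of the checks that fail for this batch."""
    return [name for name, check in checks.items() if not check(batch)]


def ingest(batch, checks, load):
    """Validate a batch first; call `load` only if every check passes."""
    failures = run_checks(batch, checks)
    if failures:
        raise DataQualityError(f"batch rejected, failed checks: {failures}")
    load(batch)


if __name__ == "__main__":
    checks = {
        "row_count > 0": lambda rows: len(rows) > 0,
        "no missing order_id": lambda rows: all(r.get("order_id") is not None for r in rows),
    }
    warehouse = []  # stands in for a downstream table
    ingest([{"order_id": 1}], checks, warehouse.extend)  # passes and is loaded
    try:
        ingest([{"order_id": None}], checks, warehouse.extend)  # rejected before loading
    except DataQualityError as err:
        print(err)
    print(warehouse)
```

Raising an exception, rather than logging and continuing, is what keeps the bad batch out of downstream tables.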

Security and Privacy at the Forefront

Recognizing the critical importance of data security and privacy, SodaGPT is designed with a security-first approach. Because it is an entirely homegrown solution, it extends the same high level of privacy and protection to data as Soda Cloud does. Soda's SOC 2 Type 2 certification further underlines its commitment to safeguarding data, supporting secure user input and reliable AI output.

How SodaGPT Works

SodaGPT revolutionizes the process of data quality testing by leveraging the power of generative AI. As an AI-driven assistant, it simplifies the creation of data quality checks, making it an invaluable tool for any organization focused on maintaining high data standards.

Source: SodaGPT

Utilizing SodaGPT in Your Workflow

To begin using SodaGPT, log in to your Soda Cloud account and open the tool with the "Ask SodaGPT" button in the main navigation menu. From there, you can type instructions in plain English, and SodaGPT generates precise, syntactically correct checks in Soda Checks Language (SodaCL). These checks are designed to integrate into your data pipeline, development workflow, and Soda Agreements, helping you identify and prevent data quality issues before they cause any downstream impact.

For new users, Soda Cloud offers a 45-day free trial, providing an excellent opportunity to explore SodaGPT's capabilities.

Instruction Guidelines and Capabilities

When interacting with SodaGPT, users should follow a few guidelines to get the best results. Instructions should be written in English, and SodaGPT produces one data quality check at a time, outputting only SodaCL. It currently supports the 'fail' condition but does not yet support the 'warn' condition. For the best output, include the name of the dataset and at least one column name from that dataset in each instruction.

SodaGPT specializes in a variety of SodaCL checks, including missing, validity, freshness, duplicate, anomaly score, schema, numeric aggregation (such as average, sum, and maximum), group by, and group evolution checks. Note that SodaGPT does not retain a history of interactions, so it cannot reference past questions or responses.
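
For intuition, each of these check types reduces to a metric compared against a threshold, and the check fails when the comparison does not hold. The sketch below computes rough in-memory equivalents of four such metrics (missing count, duplicate count, freshness, and average) and evaluates them with pass/fail outcomes only. It is an illustrative approximation written for this article, not Soda's implementation: SodaCL evaluates checks against the data source itself, and its exact semantics may differ from the definitions here. The labels merely mimic SodaCL check syntax, and the column names and rows are hypothetical.

```python
from datetime import datetime, timedelta, timezone


def missing_count(rows, column):
    """Rows where the column is absent or None."""
    return sum(1 for r in rows if r.get(column) is None)


def duplicate_count(rows, column):
    """Here defined as: distinct non-missing values that appear more than once."""
    seen, dupes = set(), set()
    for r in rows:
        value = r.get(column)
        if value is None:
            continue
        if value in seen:
            dupes.add(value)
        seen.add(value)
    return len(dupes)


def freshness(rows, column, now):
    """Age of the newest timestamp; assumes at least one non-missing value."""
    return now - max(r[column] for r in rows if r.get(column) is not None)


def avg(rows, column):
    """Mean of non-missing values; assumes at least one exists."""
    values = [r[column] for r in rows if r.get(column) is not None]
    return sum(values) / len(values)


if __name__ == "__main__":
    now = datetime(2024, 1, 2, 12, 0, tzinfo=timezone.utc)
    orders = [
        {"order_id": 1, "email": "a@example.com", "amount": 10.0, "updated_at": now - timedelta(hours=30)},
        {"order_id": 2, "email": None, "amount": 30.0, "updated_at": now - timedelta(hours=26)},
        {"order_id": 2, "email": "b@example.com", "amount": 20.0, "updated_at": now - timedelta(hours=2)},
    ]
    # Each check either passes or fails; there is no separate "warn" outcome here.
    results = {
        "missing_count(email) = 0": missing_count(orders, "email") == 0,
        "duplicate_count(order_id) = 0": duplicate_count(orders, "order_id") == 0,
        "freshness(updated_at) < 1d": freshness(orders, "updated_at", now) < timedelta(days=1),
        "avg(amount) between 5 and 50": 5 <= avg(orders, "amount") <= 50,
    }
    for check, passed in results.items():
        print("pass" if passed else "fail", check)
```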

Source: SodaGPT

About SodaGPT's AI Technology

SodaGPT is built on Soda's proprietary technology for translating natural language instructions into SodaCL checks. This technology is distinct from GPT-3, GPT-4, and ChatGPT and does not rely on OpenAI; instead, it is based on a specialized Large Language Model (LLM) developed from the open-source Falcon-7B model. The model currently does not learn from user inputs, so sensitive information from one user is never exposed to another.

Additionally, SodaGPT operates with a strict privacy protocol, only processing the instructions entered into the chat and not collecting or storing any other data. User inputs are not shared with third parties, maintaining the utmost privacy and security.

In essence, SodaGPT stands as a cutting-edge tool, bringing simplicity and precision to the complex task of data quality testing. Its user-friendly interface, combined with advanced AI capabilities, makes it an essential component for any organization striving to ensure the integrity and reliability of its data.

The Future of Data Quality Testing with AI

As we stand at the cusp of a new era in data management, the role of Artificial Intelligence (AI) in the future of data quality testing is both promising and transformative. The advent of tools like SodaGPT is just the beginning of what promises to be a significant evolution in how we handle, process, and maintain the integrity of data.

Predictive and Proactive Data Management

In the future, AI will not only streamline data quality testing but will also likely predict potential issues before they arise. The shift from reactive data governance to proactive data management, powered by AI, will enable organizations to anticipate and mitigate data quality issues, significantly reducing the risk of errors and the costs associated with them. Advanced AI algorithms could analyze historical data trends to forecast future anomalies, allowing organizations to take preemptive action.
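
As a much simpler stand-in for such forecasting models, the sketch below fits an ordinary least-squares trend line to a metric's history (here, hypothetical daily row counts), predicts the next value, and flags a new observation that deviates from the prediction by more than a relative tolerance. The 20% tolerance and the sample figures are arbitrary assumptions chosen for illustration.

```python
def fit_trend(history):
    """Least-squares fit of y = slope * x + intercept, with x = 0, 1, 2, ..."""
    n = len(history)
    if n < 2:
        raise ValueError("need at least two points to fit a trend")
    mean_x = (n - 1) / 2
    mean_y = sum(history) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(history))
    var = sum((x - mean_x) ** 2 for x in range(n))
    slope = cov / var
    return slope, mean_y - slope * mean_x


def is_anomalous(history, observed, tolerance=0.2):
    """Return (flag, forecast); flag is True if `observed` misses the next-step forecast by more than `tolerance` (relative)."""
    slope, intercept = fit_trend(history)
    forecast = slope * len(history) + intercept
    return abs(observed - forecast) > tolerance * abs(forecast), forecast


if __name__ == "__main__":
    daily_rows = [1000, 1100, 1200, 1300]   # steady growth of about 100 rows per day
    print(is_anomalous(daily_rows, 650))    # → (True, 1400.0): likely a partial load
    print(is_anomalous(daily_rows, 1390))   # → (False, 1400.0)
```

A linear trend is the simplest possible forecast; seasonal data (weekday versus weekend volumes, for example) would need a model that accounts for those cycles.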

Continual Learning and Adaptation

Emerging AI technologies are expected to continuously evolve, learning from interactions and adapting to new data environments. This means that AI systems will become more efficient and effective over time, further reducing the need for human intervention in data quality checks. As AI models become more sophisticated, they will be able to handle more complex data structures and relationships, making them invaluable in diverse industries and applications.

Integration of AI Across Data Lifecycle

AI will likely be integrated more deeply and seamlessly across the entire data lifecycle, from collection and storage to analysis and reporting. This comprehensive integration will help maintain data quality at every stage, improving overall data reliability and utility for decision-making.

Enhanced Collaboration and Accessibility

Future AI-driven data quality tools are poised to become more collaborative and accessible, enabling a wider range of users to engage in data quality management. By breaking down technical barriers and simplifying complex processes, these tools will democratize data quality efforts, allowing stakeholders to contribute effectively regardless of their technical expertise.

Ethical and Secure Data Handling

As AI takes a more prominent role in data quality testing, ethical considerations and data security will become even more critical. Future developments will need to prioritize transparency, privacy, and the ethical use of AI, ensuring that data is not only accurate and reliable but also handled responsibly.

In conclusion, the future of data quality testing with AI holds immense potential. It is poised to transform how data is managed, making it more reliable, accessible, and actionable. With tools like SodaGPT leading the charge, we are stepping into an exciting future for data quality, where AI empowers organizations to harness the full potential of their data, driving innovation, efficiency, and success.

Empower Your Data-Driven Strategies with DataHen's Custom Web Scraping Services

DataHen specializes in custom web scraping and data extraction services, ensuring that your organization has access to the most up-to-date and comprehensive data available. Whether you're looking to fuel your AI tools with high-quality data or seeking insights to drive your business decisions, DataHen provides a reliable and efficient solution. Explore DataHen's web scraping services today and take the first step toward empowering your data-driven strategies with the quality and precision they deserve.